Research Project

Campus Autonomous Robot Tours (CART)

Lead Researchers:

- Amiel Hartman, Mechanical Engineering*

- Nhut Ho, Mechanical Engineering*

- Subhobrata Chakraborty, CS*

- Ashley Geng, Electrical and Computer Engineering

- Li Liu, Computer Science

- Joe Bautista, Art + Design

Note: names marked with an asterisk (*) indicate current researchers

Collaborator:

- Ali-akbar Agha-mohammadi, JPL collaborator

Student Team:

- Hariet Yousefi, ME*

- Shari Salas, ME*

- Coulson Aquirre

- M. Fadhil Ginting

- Kyle Strickland

- CART student team, from Systems Engineering Research Laboratory (SERL) senior design projects and ME 486 senior design course

Note: names marked with an asterisk (*) indicate current students

Funding

- Funding Organization: NASA

- Funding Program: MIRO

SYNOPSIS

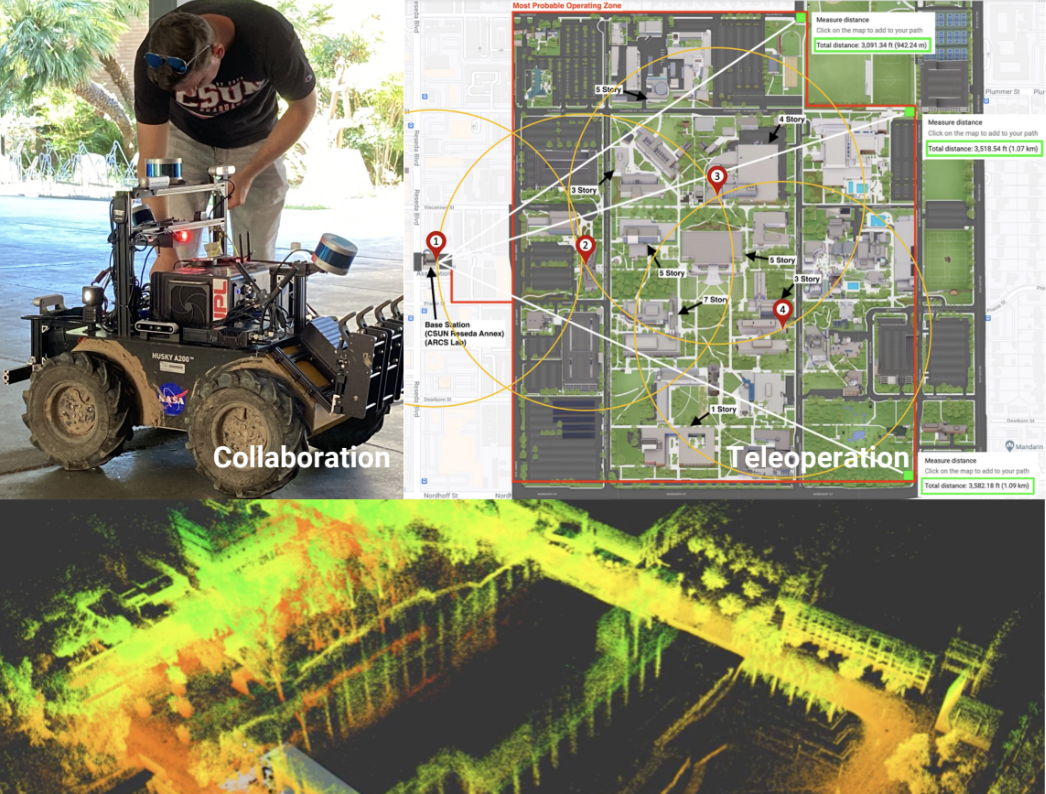

- Develop an autonomous robot system, inspired by the NASA JPL NeBula-compatible software framework, that delivers tours of the CSUN campus and showcases STEM technology to the campus and surrounding community.

- Integrate robot tours with university campus tour operations.

- Automate the tour process for in-person and virtual tours with robot interaction.

Alignment, Engagement and Contributions to the priorities of NASA’s Mission Directorates

JPL team CoSTAR uses the NeBula autonomy solution on wheeled and legged unmanned ground vehicles (UGVs) to participate in the DARPA Subterranean (SubT) Challenge, with research tasks aligned with robot teaming and exploration on NASA space missions. In collaboration with JPL, the CART project aims to develop a UGV system that showcases robotics technology to the university community by providing interactive tours of the campus and serving as a research platform for autonomy-assisted teleoperation. Wireless communication in dense urban environments is challenging without network infrastructure, and links can suffer from latency or bandwidth limitations. However, live data streaming to a ground control station for display and teleoperation is desirable for an interactive tour guide system. UGV missions in dynamic environments with pedestrians also require autonomous obstacle avoidance and fault-tolerant safety systems to avoid collisions.

Research Questions and Research Objectives

Questions:

- How do we use a robot platform to create a live, interactive, virtual tour that is engaging for visitors to the university and ARCS?

- How do we integrate the robot tour guide system into the existing infrastructure for tours at the university?

- How do we integrate the interactive tour display into the ARCS gallery space to showcase robotics technology?

- How do we safely implement teleoperation with autonomy in an urban environment?

Objectives:

- Develop software infrastructure inspired by the autonomy framework developed by team CoSTAR for the DARPA Subterranean (SubT) Challenge.

- Mechatronic design of a sensing and wireless communication payload onboard an unmanned ground vehicle.

- Integrate robot perception sensor data into the base station and user interface for remote operation at the ARCS gallery space to enhance visitor interaction during virtual campus tours.

Research Methods

- Build a software model of the CSUN campus to test robot navigation and autonomy in simulated urban environments.

- Explore the interaction of GPS waypoint navigation with autonomous path planning and obstacle avoidance behavior to achieve safe operation during campus tour scenarios with human-robot interaction.

- Field test sensor payload configurations and software for autonomous navigation on mobility platforms to enhance robot performance.
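As an illustration of how GPS waypoint navigation might interact with obstacle avoidance, the following is a minimal Python sketch and not the CART implementation. It assumes waypoints are (latitude, longitude) pairs in degrees and that a single nearest-obstacle range in meters is available from the perception payload. The function names, the arrival radius, and the stop range are hypothetical values chosen for the example.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial bearing in degrees (0 = north, 90 = east) from point 1 to point 2."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlmb = math.radians(lon2 - lon1)
    y = math.sin(dlmb) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb))
    return math.degrees(math.atan2(y, x)) % 360.0


def next_command(position, waypoints, index, obstacle_range_m,
                 arrive_radius_m=2.0, stop_range_m=1.5):
    """Return ((action, heading_deg), waypoint_index) for one control step.

    Hypothetical policy: a nearby obstacle always overrides waypoint
    following; otherwise skip waypoints already reached and head for the next.
    """
    if obstacle_range_m is not None and obstacle_range_m < stop_range_m:
        return ("stop", None), index
    while (index < len(waypoints)
           and haversine_m(*position, *waypoints[index]) < arrive_radius_m):
        index += 1
    if index >= len(waypoints):
        return ("done", None), index
    return ("drive", bearing_deg(*position, *waypoints[index])), index
```

In this sketch the safety check runs before any waypoint logic, so a pedestrian stepping in front of the robot halts it regardless of tour progress. A fielded system would likely replace the stop behavior with local replanning around the obstacle.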

Research Deliverables and Products

- Enhanced robot perception payload for mapping the environment

- Expanded user interface for remote operation and the virtual tour experience

- Mobility behavior improvements for human-robot interaction

Commercialization Opportunities

- Applications: Industrial inspection, remote exploration, tour guide

- Key Values: Automated operations with remote oversight that require fewer onsite personnel

- Potential Customers: Manufacturing, museums, mining, search and rescue

Research Timeline

Start Date: June 2020

End Date:
