Research Project

Object Recognition and Interpretation for Visually Impaired Assistance

(Perceptual Augmentation: Object Recognition and Interpretation for Visually Impaired Assistance)

Research Team

Lead Researchers:

- Dr. Abhishek Verma, Computer Science

Collaborators:

Student Team:

- Gurnoor Kaur, BS Computer Science

- Kate Hagen, BS Computer Science

- Manuel Negrete, BS Computer Science

- Edward Shatverov, BS Computer Science

- S Abrar Nizam, BS Computer Science

Funding

- Funding Organization:

- Funding Program:

SYNOPSIS

- This research focuses on designing and implementing the Vision Assist mobile app to help visually impaired people by recognizing objects and reading text aloud.

- It uses a phone’s camera and machine learning to give real-time voice feedback that helps users navigate their surroundings.

- The app also includes GPS directions and an emergency alarm for added safety.
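The synopsis describes turning what the camera sees into spoken feedback. The sketch below shows one possible way to condense a frame's detections into a single sentence for text-to-speech. It is not the project's implementation. It assumes only that the detector returns a label, a confidence score, and a bounding box for each object. The `Detection` fields, the 0.5 confidence cutoff, the split of the frame into left, ahead, and right thirds, and the use of box area as a rough proxy for distance are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One object found in a camera frame (hypothetical format, not the app's)."""
    label: str
    confidence: float
    x_center: float  # horizontal box centre, normalised: 0.0 = left edge, 1.0 = right edge
    area: float      # box area as a fraction of the frame; larger usually means closer


def position_word(x_center: float) -> str:
    """Map a normalised horizontal position to a coarse direction phrase."""
    if x_center < 1 / 3:
        return "on your left"
    if x_center > 2 / 3:
        return "on your right"
    return "ahead"


def describe(detections: list[Detection], min_confidence: float = 0.5, max_items: int = 3) -> str:
    """Build one short sentence for text-to-speech from a frame's detections."""
    # Drop low-confidence detections so the user is not told about likely false positives
    confident = [d for d in detections if d.confidence >= min_confidence]
    # Announce the largest (probably nearest) objects first, and cap how many are spoken
    confident.sort(key=lambda d: d.area, reverse=True)
    parts = [f"{d.label} {position_word(d.x_center)}" for d in confident[:max_items]]
    if not parts:
        return "No objects detected."
    sentence = ", ".join(parts)
    return sentence[0].upper() + sentence[1:] + "."
```

For a frame containing a person in the centre (confidence 0.9), a chair on the left (0.7), and a dog on the right (0.3), `describe` drops the dog and returns "Person ahead, chair on your left." The resulting string would then be passed to the app's speech engine.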

Research Questions and Research Objectives

-

Develop an assistive technology solution that improves the independence of visually impaired users through object detection and text interpretation.

-

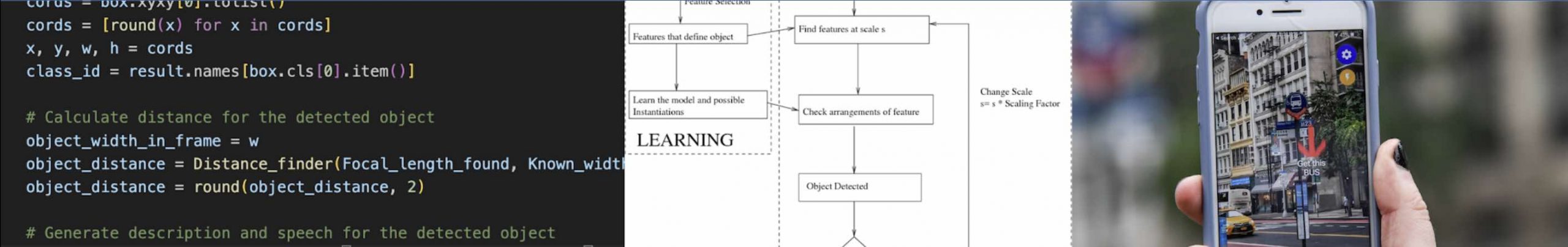

Enhance real-time environment awareness using a smartphone’s camera and machine learning algorithms.

-

Implement a user-friendly, accessible interface that provides clear voice feedback for navigation and object recognition.
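Real-time detection runs on every camera frame, so a naive design would repeat the same announcement many times per second, which works against the goal of clear voice feedback. The sketch below shows one hypothetical remedy, a per-phrase cooldown. The `AnnouncementThrottle` class and its 3-second default are assumptions for illustration and are not part of the documented app.

```python
class AnnouncementThrottle:
    """Suppress repeats of the same phrase within a cooldown window (hypothetical design)."""

    def __init__(self, cooldown_s: float = 3.0) -> None:
        self.cooldown_s = cooldown_s
        # Time at which each phrase was last spoken
        self._last_spoken: dict[str, float] = {}

    def should_speak(self, phrase: str, now: float) -> bool:
        """Return True (and record the time) if the phrase has not been spoken recently."""
        last = self._last_spoken.get(phrase)
        if last is not None and now - last < self.cooldown_s:
            return False
        self._last_spoken[phrase] = now
        return True
```

In a running app, `now` would typically come from a monotonic clock such as `time.monotonic()`. Passing it in explicitly keeps the logic easy to test. A new phrase, such as a different object coming into view, is spoken immediately. A repeated phrase waits out the cooldown.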

Research Methods

- Conducted interviews with visually impaired individuals to learn about their daily navigation challenges.

- Used the YOLO (You Only Look Once) object detection model to identify objects in the user's surroundings.

- Used a Google Cloud API to convert text into clear speech for users.

- Integrated GPS navigation with Apple Maps to provide real-time voice directions.

- Added an emergency alarm feature that uses Twilio to contact the user's emergency contacts.
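The methods list says the emergency alarm reaches the user's contacts through Twilio, but it does not say which Twilio product is used. The sketch below assumes alerts are sent as text messages through Twilio's Programmable Messaging REST API. That API takes a `POST` to the account's `Messages.json` resource with `To`, `From`, and `Body` form fields and HTTP Basic authentication. The sketch builds one request per contact without sending it. The function name, message text, and phone numbers are hypothetical.

```python
import base64
import urllib.parse
import urllib.request

# Twilio Programmable Messaging endpoint (assumes SMS alerts; not confirmed by the project)
TWILIO_MESSAGES_URL = "https://api.twilio.com/2010-04-01/Accounts/{sid}/Messages.json"


def build_alert_requests(
    account_sid: str,
    auth_token: str,
    from_number: str,
    contacts: list[str],
    message: str,
) -> list[urllib.request.Request]:
    """Build (but do not send) one SMS request per emergency contact."""
    # HTTP Basic auth: the account SID is the username, the auth token is the password
    credentials = base64.b64encode(f"{account_sid}:{auth_token}".encode("utf-8")).decode("ascii")
    url = TWILIO_MESSAGES_URL.format(sid=account_sid)
    alerts = []
    for to_number in contacts:
        # Twilio expects form-encoded parameters named To, From and Body
        form = urllib.parse.urlencode({"To": to_number, "From": from_number, "Body": message})
        alerts.append(
            urllib.request.Request(
                url,
                data=form.encode("utf-8"),
                headers={
                    "Authorization": f"Basic {credentials}",
                    "Content-Type": "application/x-www-form-urlencoded",
                },
                method="POST",
            )
        )
    return alerts
```

Each request could then be sent with `urllib.request.urlopen`. In a production app, the Twilio auth token would normally be kept on a server rather than in the mobile client. The phone would then ask that server to send the alert instead of calling Twilio directly.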

Research Results and Deliverables

- Developed a functional prototype that identifies objects and reads text aloud.

- Integrated voice-guided GPS navigation to increase user independence.

- Implemented an emergency alarm feature to improve user safety.

Commercialization and/or Societal Impact Opportunities

- Application: Launch the app as a vital tool for visually impaired individuals.

- Key Value: Increases independence and enhances safety in everyday situations.

- Potential Users: Visually impaired individuals and organizations focused on accessibility.

Research Timeline

Start Date:

End Date:
